| 失效链接处理 |

|

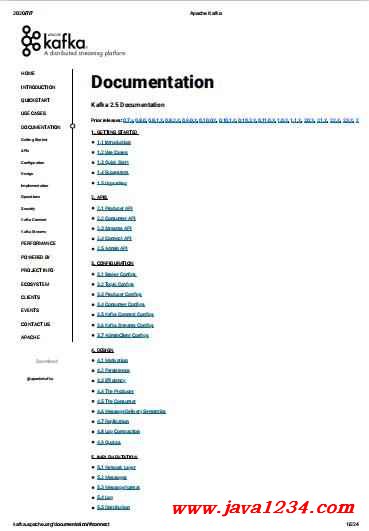

Apache Kafka PDF Download


Main content:

Kafka as a Messaging System

How does Kafka's notion of streams compare to a traditional enterprise messaging system?

Messaging traditionally has two models: queuing and publish-subscribe. In a queue, a pool of consumers may read from a server and each record goes to one of them; in publish-subscribe the record is broadcast to all consumers. Each of these two models has a strength and a weakness. The strength of queuing is that it allows you to divide up the processing of data over multiple consumer instances, which lets you scale your processing. Unfortunately, queues aren't multi-subscriber: once one process reads the data it's gone. Publish-subscribe allows you to broadcast data to multiple processes, but has no way of scaling processing since every message goes to every subscriber.

The consumer group concept in Kafka generalizes these two concepts. As with a queue, the consumer group allows you to divide up processing over a collection of processes (the members of the consumer group). As with publish-subscribe, Kafka allows you to broadcast messages to multiple consumer groups.
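The two behaviours can be sketched in a few lines of Python. This is only a toy model, not Kafka client code: the `deliver` function, the group and member names, and the per-record round-robin choice are all assumptions made for illustration (real Kafka hands out partitions to group members rather than individual records).

```python
from collections import defaultdict
from itertools import cycle


def deliver(records, groups):
    """Deliver each record once per group, to exactly one member of that group.

    `groups` maps a (hypothetical) group name to its list of member names.
    Members are picked round-robin purely for illustration.
    """
    received = defaultdict(list)
    pickers = {name: cycle(members) for name, members in groups.items()}
    for record in records:
        for name in groups:
            # Broadcast across groups, queue-like within a group.
            member = next(pickers[name])
            received[(name, member)].append(record)
    return dict(received)


groups = {"billing": ["b1", "b2"], "audit": ["a1"]}
result = deliver(["r0", "r1", "r2", "r3"], groups)
for (group, member), recs in sorted(result.items()):
    print(group, member, recs)
```

Both groups see all four records, while inside the two-member group the records are split between the members.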

The advantage of Kafka's model is that every topic has both these properties: it can scale processing and is also multi-subscriber, so there is no need to choose one or the other.

Kafka has stronger ordering guarantees than a traditional messaging system, too.

A traditional queue retains records in order on the server, and if multiple consumers consume from the queue then the server hands out records in the order they are stored. However, although the server hands out records in order, the records are delivered asynchronously to consumers, so they may arrive out of order on different consumers. This effectively means the ordering of the records is lost in the presence of parallel consumption. Messaging systems often work around this by having a notion of "exclusive consumer" that allows only one process to consume from a queue, but of course this means that there is no parallelism in processing.

Kafka does it better. By having a notion of parallelism (the partition) within the topics, Kafka is able to provide both ordering guarantees and load balancing over a pool of consumer processes. This is achieved by assigning the partitions in the topic to the consumers in the consumer group so that each partition is consumed by exactly one consumer in the group. By doing this we ensure that the consumer is the only reader of that partition and consumes the data in order. Since there are many partitions this still balances the load over many consumer instances. Note however that there cannot be more consumer instances in a consumer group than partitions.
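A minimal sketch of this assignment rule, assuming a plain round-robin strategy. The `assign_partitions` helper and the consumer names are hypothetical, and Kafka's own assignors are more involved; the sketch only shows the invariant that every partition has exactly one owner within a group.

```python
def assign_partitions(partitions, consumers):
    """Assign every partition to exactly one consumer, round-robin.

    Illustrative only: this is not Kafka's actual assignment algorithm.
    """
    assignment = {consumer: [] for consumer in consumers}
    for i, partition in enumerate(partitions):
        owner = consumers[i % len(consumers)]
        assignment[owner].append(partition)
    return assignment


# Four partitions shared by three consumers.
three = assign_partitions([0, 1, 2, 3], ["c1", "c2", "c3"])
print(three)

# Five consumers for four partitions: the fifth is left with nothing to read.
five = assign_partitions([0, 1, 2, 3], ["c1", "c2", "c3", "c4", "c5"])
print(five)
```

In the second call the surplus consumer receives an empty list, which is the practical meaning of the limit stated above: a consumer beyond the partition count has no partition to read.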

Kafka as a Storage System

Any message queue that allows publishing messages decoupled from consuming them is effectively acting as a storage system for the in-flight messages. What is different about Kafka is that it is a very good storage system.

Data written to Kafka is written to disk and replicated for fault-tolerance. Kafka allows producers to wait on acknowledgement so that a write isn't considered complete until it is fully replicated and guaranteed to persist even if the server written to fails.

The disk structures Kafka uses scale well: Kafka will perform the same whether you have 50 KB or 50 TB of persistent data on the server.

As a result of taking storage seriously and allowing the clients to control their read position, you can think of Kafka as a kind of special purpose distributed filesystem dedicated to high-performance, low-latency commit log storage, replication, and propagation.
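The idea of an append-only log whose readers control their own position can be sketched as follows. The `CommitLog` and `Reader` classes are hypothetical names for an in-memory toy, not part of any Kafka API, and nothing here is persisted or replicated.

```python
class CommitLog:
    """Append-only log; reading a record never removes it."""

    def __init__(self):
        self._records = []

    def append(self, record):
        self._records.append(record)
        return len(self._records) - 1  # offset of the record just written

    def read(self, offset, max_records=10):
        return self._records[offset:offset + max_records]


class Reader:
    """Each reader tracks its own position (offset) in the log."""

    def __init__(self, log, offset=0):
        self.log = log
        self.offset = offset

    def poll(self):
        batch = self.log.read(self.offset)
        self.offset += len(batch)
        return batch

    def seek(self, offset):
        self.offset = offset


log = CommitLog()
for value in ["a", "b", "c"]:
    log.append(value)

reader = Reader(log)
first = reader.poll()     # reads everything written so far
reader.seek(1)            # rewind: the data is still there
replayed = reader.poll()
print(first, replayed)
```

Because the position lives with the reader rather than the log, rewinding and re-reading is just a matter of changing a number.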

For details about Kafka's commit log storage and replication design, please read this page.

Kafka for Stream Processing

It isn't enough to just read, write, and store streams of data; the purpose is to enable real-time processing of streams.

In Kafka a stream processor is anything that takes continual streams of data from input topics, performs some processing on this input, and produces continual streams of data to output topics.

For example, a retail application might take in input streams of sales and shipments, and output a stream of reorders and price adjustments computed off this data.
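As a rough illustration of the retail example, the sketch below treats a Python generator as the stream processor. The event shape `(kind, item, quantity)`, the `reorder_stream` name, and the `reorder_below` threshold are all invented for this sketch; a real application would read from and write to Kafka topics instead of in-memory lists.

```python
def reorder_stream(events, reorder_below=5):
    """Consume sale/shipment events and yield reorder requests.

    Each event is (kind, item, quantity), where kind is "sale" or "shipment".
    Stock levels are kept as local state while the stream is processed.
    """
    stock = {}
    for kind, item, quantity in events:
        if kind == "shipment":
            stock[item] = stock.get(item, 0) + quantity
        elif kind == "sale":
            stock[item] = stock.get(item, 0) - quantity
            if stock[item] < reorder_below:
                # Ask for enough units to get back to the threshold.
                yield ("reorder", item, reorder_below - stock[item])


events = [
    ("shipment", "widget", 10),
    ("sale", "widget", 4),      # stock 6, above the threshold
    ("sale", "widget", 3),      # stock 3, triggers a reorder of 2
    ("shipment", "widget", 5),  # stock 8
    ("sale", "widget", 1),      # stock 7, above the threshold
]
reorders = list(reorder_stream(events))
print(reorders)
```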

It is possible to do simple processing directly using the producer and consumer APIs. However, for more complex transformations Kafka provides a fully integrated Streams API. This allows building applications that do non-trivial processing that compute aggregations off of streams or join streams together.

2020/7/7 Apache Kafka

kafka.apache.org/documentation/#connect 7/224

This facility helps solve the hard problems this type of application faces: handling out-of-order data, reprocessing input as code changes, performing stateful computations, etc.

The Streams API builds on the core primitives Kafka provides: it uses the producer and consumer APIs for input, uses Kafka for stateful storage, and uses the same group mechanism for fault tolerance among the stream processor instances.

Putting the Pieces Together

This combination of messaging, storage, and stream processing may seem unusual but it is essential to Kafka's role as a streaming platform.

A distributed file system like HDFS allows storing static files for batch processing. Effectively a system like this allows storing and processing historical data from the past.

A traditional enterprise messaging system allows processing future messages that will arrive after you subscribe. Applications built in this way process future data as it arrives.

Kafka combines both of these capabilities, and the combination is critical both for Kafka usage as a platform for streaming applications as well as for streaming data pipelines.

By combining storage and low-latency subscriptions, streaming applications can treat both past and future data the same way. That is, a single application can process historical, stored data, but rather than ending when it reaches the last record, it can keep processing as future data arrives. This is a generalized notion of stream processing that subsumes batch processing as well as message-driven applications.

Likewise, for streaming data pipelines, subscription to real-time events makes it possible to use Kafka for very low-latency pipelines, while the ability to store data reliably makes it possible to use it for critical data where the delivery of data must be guaranteed, or for integration with offline systems that load data only periodically or may go down for extended periods of time for maintenance. The stream processing facilities make it possible to transform data as it arrives.

For more information on the guarantees, APIs, and capabilities Kafka provides, see the rest of the documentation.

1.2 Use Cases

Here is a description of a few of the popular use cases for Apache Kafka®. For an overview of a number of these areas in action, see this blog post.

Messaging

Kafka works well as a replacement for a more traditional message broker. Message brokers are used for a variety of reasons (to decouple processing from data producers, to buffer unprocessed messages, etc). In comparison to most messaging systems Kafka has better throughput, built-in partitioning, replication, and fault-tolerance, which makes it a good solution for large scale message processing applications.

In our experience messaging uses are often comparatively low-throughput, but may require low end-to-end latency and often depend on the strong durability guarantees Kafka provides.

In this domain Kafka is comparable to traditional messaging systems such as ActiveMQ or RabbitMQ.

Website Activity Tracking

The original use case for Kafka was to be able to rebuild a user activity tracking pipeline as a set of real-time publish-subscribe feeds. This means site activity (page views, searches, or other actions users may take) is published to central topics with one topic per activity type. These feeds are available for subscription for a range of use cases including real-time processing, real-time monitoring, and loading into Hadoop or offline data warehousing systems for offline processing and reporting.

Activity tracking is often very high volume as many activity messages are generated for each user page view.

Metrics

Kafka is often used for operational monitoring data. This involves aggregating statistics from distributed applications to produce centralized feeds of operational data.

Log Aggregation


Many people use Kafka as a replacement for a log aggregation solution. Log aggregation typically collects physical log files off servers and puts them in a central place (a file server or HDFS perhaps) for processing. Kafka abstracts away the details of files and gives a cleaner abstraction of log or event data as a stream of messages. This allows for lower-latency processing and easier support for multiple data sources and distributed data consumption. In comparison to log-centric systems like Scribe or Flume, Kafka offers equally good performance, stronger durability guarantees due to replication, and much lower end-to-end latency.

Stream Processing

Many users of Kafka process data in processing pipelines consisting of multiple stages, where raw input data is consumed from Kafka topics and then aggregated, enriched, or otherwise transformed into new topics for further consumption or follow-up processing. For example, a processing pipeline for recommending news articles might crawl article content from RSS feeds and publish it to an "articles" topic; further processing might normalize or deduplicate this content and publish the cleansed article content to a new topic; a final processing stage might attempt to recommend this content to users. Such processing pipelines create graphs of real-time data flows based on the individual topics. Starting in 0.10.0.0, a light-weight but powerful stream processing library called Kafka Streams is available in Apache Kafka to perform such data processing as described above. Apart from Kafka Streams, alternative open source stream processing tools include Apache Storm and Apache Samza.

Event Sourcing

Event sourcing is a style of application design where state changes are logged as a time-ordered sequence of records. Kafka's support for very large stored log data makes it an excellent backend for an application built in this style.
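A small sketch of the event-sourcing idea: current state is never stored directly, it is derived by replaying the ordered event log. The account-style events (`deposited`, `withdrawn`) and the `replay` helper are invented for illustration; in a Kafka-backed design the list would be a topic read from the beginning.

```python
def apply_event(balance, event):
    """Apply one state change to the current balance."""
    kind, amount = event
    if kind == "deposited":
        return balance + amount
    if kind == "withdrawn":
        return balance - amount
    raise ValueError(f"unknown event kind: {kind}")


def replay(events, initial=0):
    """Rebuild state by applying every logged event in order."""
    state = initial
    for event in events:
        state = apply_event(state, event)
    return state


events = [("deposited", 100), ("withdrawn", 30), ("deposited", 5)]
print(replay(events))      # state after all events
print(replay(events[:2]))  # state as it was after the second event
```

Replaying a prefix of the log reconstructs any earlier state, which is one reason a long-retained, ordered log suits this style.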

Commit Log

Kafka can serve as a kind of external commit-log for a distributed system. The log helps replicate data between nodes and acts as a re-syncing mechanism for failed nodes to restore their data. The log compaction feature in Kafka helps support this usage. In this usage Kafka is similar to the Apache BookKeeper project.
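To see why compaction helps a node restore its data, here is a simplified model that keeps only the latest record per key. The `compact` and `rebuild` helpers are hypothetical, and treating a `None` value as a delete marker that is dropped immediately is a simplification of this sketch, not a description of how Kafka schedules compaction.

```python
def compact(log):
    """Keep only the latest record per key, preserving log order.

    A value of None is treated as a delete marker and dropped.
    """
    latest_offset = {}
    for offset, (key, _value) in enumerate(log):
        latest_offset[key] = offset
    return [
        (key, value)
        for offset, (key, value) in enumerate(log)
        if latest_offset[key] == offset and value is not None
    ]


def rebuild(log):
    """Restore a key-value state by replaying a log from the start."""
    state = {}
    for key, value in log:
        if value is None:
            state.pop(key, None)
        else:
            state[key] = value
    return state


log = [("k1", "a"), ("k2", "b"), ("k1", "c"), ("k3", "d"), ("k2", None)]
compacted = compact(log)
print(compacted)
print(rebuild(log) == rebuild(compacted))
```

The compacted log is shorter, yet replaying it yields the same final state as replaying the full history, so a recovering node has less to read.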

1.3 Quick Start

This tutorial assumes you are starting fresh and have no existing Kafka or ZooKeeper data. Since Kafka console scripts are different for Unix-based and Windows platforms, on Windows platforms use bin\windows\ instead of bin/, and change the script extension to .bat.

Step 1: Download the code

Download the 2.5.0 release and un-tar it.

> tar -xzf kafka_2.12-2.5.0.tgz

> cd kafka_2.12-2.5.0

Step 2: Start the server

Kafka uses ZooKeeper so you need to first start a ZooKeeper server if you don't already have one. You can use the convenience script packaged with Kafka to get a quick-and-dirty single-node ZooKeeper instance.

> bin/zookeeper-server-start.sh config/zookeeper.properties

[2013-04-22 15:01:37,495] INFO Reading configuration from: config/zookeeper.properties (org.apache.zooke

...

Now start the Kafka server:

> bin/kafka-server-start.sh config/server.properties

[2013-04-22 15:01:47,028] INFO Verifying properties (kafka.utils.VerifiableProperties)

[2013-04-22 15:01:47,051] INFO Property socket.send.buffer.bytes is overridden to 1048576 (kafka.utils.V

...

Step 3: Create a topic

Let's create a topic named "test" with a single partition and only one replica:

> bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic test

We can now see that topic if we run the list topic command:


> bin/kafka-topics.sh --list --bootstrap-server localhost:9092

test

Alternatively, instead of manually creating topics you can also configure your brokers to auto-create topics when a non-existent topic is published to.

Step 4: Send some messages

Kafka comes with a command line client that will take input from a file or from standard input and send it out as messages to the Kafka cluster. By default, each line will be sent as a separate message.

Run the producer and then type a few messages into the console to send to the server.

> bin/kafka-console-producer.sh --bootstrap-server localhost:9092 --topic test

This is a message

This is another message

Step 5: Start a consumer

Kafka also has a command line consumer that will dump out messages to standard output.

> bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test --from-beginning

This is a message

This is another message

If you have each of the above commands running in a different terminal then you should now be able to type messages into the producer terminal and see them appear in the consumer terminal.

All of the command line tools have additional options; running the command with no arguments will display usage information documenting them in more detail.

Step 6: Setting up a multi-broker cluster

So far we have been running against a single broker, but that's no fun. For Kafka, a single broker is just a cluster of size one, so nothing much changes other than starting a few more broker instances. But just to get a feel for it, let's expand our cluster to three nodes (still all on our local machine).

First we make a config file for each of the brokers (on Windows use the copy command instead):

> cp config/server.properties config/server-1.properties

> cp config/server.properties config/server-2.properties

Now edit these new files and set the following properties:

config/server-1.properties:

broker.id=1

listeners=PLAINTEXT://:9093

log.dirs=/tmp/kafka-logs-1

config/server-2.properties:

broker.id=2

listeners=PLAINTEXT://:9094

log.dirs=/tmp/kafka-logs-2

The broker.id property is the unique and permanent name of each node in the cluster. We have to override the port and log directory only because we are running these all on the same machine and we want to keep the brokers from all trying to register on the same port or overwrite each other's data.

We already have ZooKeeper and our single node started, so we just need to start the two new nodes:

> bin/kafka-server-start.sh config/server-1.properties &

...

> bin/kafka-server-start.sh config/server-2.properties &

...

Now create a new topic with a replication factor of three:

> bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 3 --partitions 1 -

Okay, but now that we have a cluster, how can we know which broker is doing what? To see that, run the "describe topics" command:


Here is an explanation of the output. The first line gives a summary of all the partitions; each additional line gives information about one partition. Since we have only one partition for this topic there is only one line.

"leader" is the node responsible for all reads and writes for the given partition. Each node will be the leader for a randomly selected portion of the partitions.

"replicas" is the list of nodes that replicate the log for this partition regardless of whether they are the leader or even if they are currently alive.

"isr" is the set of "in-sync" replicas. This is the subset of the replicas list that is currently alive and caught-up to the leader.

Note that in my example node 1 is the leader for the only partition of the topic.
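The relationship between the three fields can be modelled in a few lines. This is a toy sketch only: the `PartitionState` class, the broker ids, and the rule "promote the first remaining in-sync replica" are assumptions made for illustration and do not describe Kafka's actual leader election.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PartitionState:
    leader: Optional[int]  # broker handling reads and writes
    replicas: List[int]    # every broker assigned a copy, alive or not
    isr: List[int]         # subset of replicas that is alive and caught up

    def broker_failed(self, broker_id):
        """Drop a failed broker from the ISR and re-elect if it was the leader."""
        if broker_id in self.isr:
            self.isr.remove(broker_id)
        if self.leader == broker_id:
            # Assumption for this sketch: promote the first in-sync replica.
            self.leader = self.isr[0] if self.isr else None


# Hypothetical starting point: node 1 leads, all three replicas in sync.
state = PartitionState(leader=1, replicas=[1, 2, 0], isr=[1, 2, 0])
state.broker_failed(1)
print(state)
```

After the failure the replicas list is unchanged, since it records assignment rather than liveness, while the ISR shrinks and leadership moves to a broker that is still in it.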

We can run the same command on the original topic we created to see where it is:

So there is no surprise there: the original topic has no replicas and is on server 0, the only server in our cluster when we created it.

Let's publish a few messages to our new topic:

Now let's consume these messages:

Now let's test out fault-tolerance. Broker 1 was acting as the leader so let's kill it:
